Why build your own ChatGPT app

Several reasons come to mind:

- OpenAI API models: At the time of writing, the ChatGPT web app only supports the GPT-4 and GPT-3.5 models. The OpenAI API, however, provides access to several additional models. For instance, you can currently use the gpt-4-0125-preview model, which is intended to reduce instances of “laziness,” where the model does not complete a task. The gpt-4-turbo-preview model is supposed to be faster than GPT-4.

- No message cap for GPT-4: At the time of writing, a ChatGPT Plus subscription is limited to 40 messages every 3 hours. If you access GPT-4 through the API, there is no such message cap, although API rate limits still apply. You pay per token for API usage, but for personal use, the costs are probably negligible.

- Your own models: If you want to fine-tune one of OpenAI’s models or use embeddings in your own applications, the API is the way to do it. In one of my upcoming posts, I will demonstrate how to build your own OpenAI-based model using your own data. With your ChatGPT app, you can then interact with your model, which will have knowledge that the stock OpenAI model lacks. By default, OpenAI does not use data submitted through the API to train its models.

- Your own features: The ChatGPT web app only offers basic features. Once you are familiar with the OpenAI API, you can add any feature you want or that your organization requires. With AI’s assistance, you can create your own ChatGPT app in a short time, even if you are not an experienced developer.
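Because the model is just a parameter of the API call, switching between the models mentioned above is a one-line change. Here is a minimal sketch, assuming the v1 `openai` Python package and that the model names are still available to your account. `ask` is a hypothetical helper for illustration, not part of the library.

```python
def ask(client, prompt, model="gpt-3.5-turbo"):
    """Send a single prompt to the given model and return the reply text.

    `client` is assumed to be an openai.OpenAI() instance, or any object
    exposing the same chat.completions.create interface.
    """
    completion = client.chat.completions.create(
        model=model,  # swap in any model name your account can access
        messages=[{"role": "user", "content": prompt}],
    )
    return completion.choices[0].message.content
```

With a real client, a call could look like `ask(OpenAI(), "Hello", model="gpt-4-turbo-preview")`, where `OpenAI` is imported from the `openai` package and the API key is set in the OPENAI_API_KEY environment variable.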

How to build your own ChatGPT app

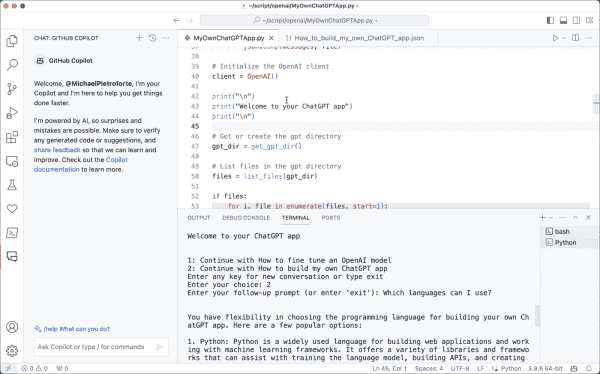

Please note that the Python script below is only meant to help you get started. The major enhancement over the former chatbot is that the application now saves conversations as JSON files when you type exit. This means that whenever you restart your ChatGPT app, you can carry on with your previous conversations.

For this purpose, the code uses two functions, load_messages and save_messages, to load and save conversations. load_messages(filename) reads the conversation history from a file, where filename is the path to the JSON file that contains the conversation, and the result is stored in the messages list. This list is then passed to the chat.completions.create method of the OpenAI API, so the GPT model can use the history to produce a context-aware response.
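To make the save-and-restore idea concrete, here is a small round-trip sketch that runs without an API key. The two functions mirror the ones in the script. The sample conversation and the file names are made up for illustration, and a temporary directory stands in for the app's gpt folder.

```python
import json
import os
import tempfile


def load_messages(filename):
    """Return the saved message list, or an empty list if the file is missing."""
    if os.path.exists(filename):
        with open(filename, "r", encoding="utf-8") as file:
            return json.load(file)
    return []


def save_messages(filename, messages):
    """Write the message list to disk as JSON."""
    with open(filename, "w", encoding="utf-8") as file:
        json.dump(messages, file)


# Hypothetical conversation in the format the chat API expects
history = [
    {"role": "system", "content": "You give concise answers"},
    {"role": "user", "content": "What is JSON?"},
    {"role": "assistant", "content": "A lightweight text format for structured data."},
]

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "What_is_JSON.json")
    save_messages(path, history)
    restored = load_messages(path)
    missing = load_messages(os.path.join(tmp_dir, "no_such_file.json"))

print(restored == history)  # True: the restored list equals what was saved
print(missing)              # []: a missing file yields an empty history
```

Since the history is a plain list of dictionaries, the restored list can be passed to the API as-is.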

<html>

<body>

<pre><code>import os
import json
from openai import OpenAI


def create_directory(directory):
    # Create the directory if it does not exist yet
    if not os.path.exists(directory):
        os.makedirs(directory)


def get_gpt_dir():
    # Conversations are stored in a "gpt" folder next to this script
    script_dir = os.path.dirname(os.path.realpath(__file__))
    gpt_dir = os.path.join(script_dir, "gpt")
    create_directory(gpt_dir)
    return gpt_dir


def list_files(directory):
    # Return regular files only, skipping subdirectories
    files = [f for f in os.listdir(directory) if os.path.isfile(os.path.join(directory, f))]
    return files


def safe_filename(prompt):
    # Replace every non-alphanumeric character with an underscore
    filename = "".join([c if c.isalpha() or c.isdigit() else "_" for c in prompt])
    return filename.rstrip("_") + ".json"


def load_messages(filename):
    # Return the saved conversation, or an empty list if the file is missing
    if os.path.exists(filename):
        with open(filename, "r", encoding="utf-8") as file:
            return json.load(file)
    return []


def save_messages(filename, messages):
    with open(filename, "w", encoding="utf-8") as file:
        json.dump(messages, file)


# The client reads the API key from the OPENAI_API_KEY environment variable
client = OpenAI()

print("Welcome to your ChatGPT app")
gpt_dir = get_gpt_dir()
files = list_files(gpt_dir)

# Offer saved conversations, if there are any
if files:
    for i, file in enumerate(files, start=1):
        conversation = file.replace("_", " ")
        conversation = conversation.replace(".json", "")
        print(f"{i}: Continue with {conversation}")

print("Enter any key for new conversation or type exit")
choice = input("Enter your choice: ").strip()

if choice.lower() == "exit":
    raise SystemExit

# A valid number selects a saved conversation; anything else starts a new one
if choice.isdigit() and int(choice) in range(1, len(files) + 1):
    filename = os.path.join(gpt_dir, files[int(choice) - 1])
    messages = load_messages(filename)
else:
    print("Starting a new conversation")
    messages = [{"role": "system", "content": "You give concise answers"}]

# Only the system message is present, so this is a new conversation
if len(messages) == 1:
    first_prompt = input("Enter your first prompt or enter 'exit': ")
    print("")
    if first_prompt.lower() == "exit":
        raise SystemExit
    # The first prompt doubles as the name of the conversation file
    filename = os.path.join(gpt_dir, safe_filename(first_prompt))
    messages.append({"role": "user", "content": first_prompt})
    completion = client.chat.completions.create(
        model="gpt-3.5-turbo",
        messages=messages
    )
    first_response = completion.choices[0].message.content
    print(first_response + "\n")
    messages.append({"role": "assistant", "content": first_response})

while True:
    user_prompt = input("Enter your follow-up prompt (or enter 'exit'): ")
    print("")
    if user_prompt.lower() == "exit":
        save_messages(filename, messages)
        print("Conversation saved to", filename)
        break
    messages.append({"role": "user", "content": user_prompt})
    # The full history is sent each time so the model keeps the context
    completion = client.chat.completions.create(
        model="gpt-3.5-turbo",
        messages=messages
    )
    response = completion.choices[0].message.content
    print(response + "\n")
    messages.append({"role": "assistant", "content": response})</code></pre>

<p>Here’s a breakdown of the script:</p>

<ol>

<li>Import necessary modules: The script starts by importing the os and json modules and the OpenAI client class from the openai package.</li>

<li>Define utility functions: Several utility functions are defined for use later in the script:

<ul>

<li>create_directory(directory): Checks if a directory exists, and if not, creates it.</li>

<li>get_gpt_dir(): Returns the path to a ‘gpt’ directory located in the script’s directory, creating it if necessary.</li>

<li>list_files(directory): Lists all regular files in a given directory; subdirectories are skipped.</li>

<li>safe_filename(prompt): Creates a safe filename from a given prompt by replacing non-alphanumeric characters with underscores, stripping trailing underscores, and appending a ‘.json’ extension.</li>

<li>load_messages(filename): Loads messages from a JSON file and returns an empty list if the file does not exist.</li>

<li>save_messages(filename, messages): Saves messages to a JSON file.</li>

</ul>

</li>

<li>Initialize the OpenAI client: The OpenAI client is initialized with client = OpenAI().</li>

<li>Get or create the gpt directory: The ‘gpt’ directory is retrieved or created with gpt_dir = get_gpt_dir().</li>

<li>List files in the gpt directory: All files in the ‘gpt’ directory are listed with files = list_files(gpt_dir).</li>

<li>Present existing conversations to the user: If any files exist, they are presented to the user as conversations they can continue.</li>

<li>Prompt the user to make a choice: The user is then prompted to make a choice. If they choose to exit, the script ends. If they choose a number corresponding to a file, that conversation is loaded. If they enter anything else, a new conversation is started with a system message.</li>

<li>Handle the user’s choice: If a new conversation is started, the user is asked for the first prompt. If they choose to exit, the script ends. Otherwise, the prompt is sent to the gpt-3.5-turbo model, which generates a response. The user’s prompt and the model’s response are then added to the conversation.</li>

<li>Loop for follow-up prompts: The script then enters a loop where it repeatedly asks the user for follow-up prompts. If the user chooses to exit, the conversation is saved to a file, and the script ends. Otherwise, the whole conversation, including the new prompt, is sent to the gpt-3.5-turbo model, which generates a response. The user’s prompt and the model’s response are then added to the conversation, and the loop continues.</li>

</ol>

</body>

</html>
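One limitation of the script is that it only writes the conversation to disk when you type exit, so an interrupted session (for example, Ctrl+C or a failed API call) loses the exchange. A possible refinement is to save after every turn. The following is a minimal sketch, assuming the same message format and the v1 `openai` client interface. `chat_turn` is a hypothetical helper and not part of the original script.

```python
import json


def save_messages(filename, messages):
    """Write the message list to disk as JSON (same as in the script)."""
    with open(filename, "w", encoding="utf-8") as file:
        json.dump(messages, file)


def chat_turn(client, messages, user_prompt, filename, model="gpt-3.5-turbo"):
    """Run one prompt/response exchange and persist the history right away.

    `client` is assumed to be an openai.OpenAI() instance, or any object
    exposing the same chat.completions.create interface.
    """
    messages.append({"role": "user", "content": user_prompt})
    completion = client.chat.completions.create(model=model, messages=messages)
    response = completion.choices[0].message.content
    messages.append({"role": "assistant", "content": response})
    # Save on every turn instead of only when the user types exit
    save_messages(filename, messages)
    return response
```

In the follow-up loop, the body after the exit check could then shrink to something like `print(chat_turn(client, messages, user_prompt, filename) + "\n")`. The trade-off is one extra file write per turn, which should be negligible for a personal chat app.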

Let us know which features you’d love to see incorporated into the ChatGPT app in the comments below.