This article assumes you already have a working Ceph cluster in a healthy state (no disks or hosts are missing). I run all commands in this article as root, because some of them modify kernel settings. Note that benchmarking and triggering rebalancing can put significant load on a cluster.

Upgrading and un-tuning

Over time, the software that underpins Ceph and its algorithms have changed significantly. Regularly upgrading your clients and servers to the latest version (or at least the latest LTS version) of both Ceph and the operating system is recommended.

Over the past few years, one of the major shifts has been the transition from Filestore to BlueStore as the on-disk format. Converting an existing cluster is an extensive process, and documentation is available on how to perform this change. After we switched to BlueStore, we observed a performance increase of about 20% in our environment.

If you followed earlier tuning guides on this subject, consider removing their performance directives from your Ceph configuration file after major upgrades. You may find that the new default settings perform better.

Benchmarking your setup

For benchmarking, I use the built-in rados bench command and focus on random workloads, which are a reasonable approximation of virtual machine workloads.

First, create a new storage pool:

ceph osd pool create testpool 128 128

The two 128 values set the number of placement groups (PGs; pg_num and pgp_num). PGs are the mechanism Ceph uses to distribute data. The optimal number of PGs varies and depends on factors such as the number of OSDs, the total capacity, and the number of objects in your pool. We will discuss this further below.

Next, I fill the pool with data by importing a number of VM images from backup, running the command below in a script loop. Here, $IMAGE is a variable that holds the name of the image in the current loop iteration:

rbd import --dest-pool testpool --dest "$IMAGE" "/backup/vm/$IMAGE"
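A minimal sketch of such a loop follows. It assumes that every regular file in /backup/vm is an image whose file name should become the image name in testpool. import_images is a hypothetical helper name, and the RBD variable is an illustrative addition that lets you preview the commands with RBD=echo before running them for real.

```shell
#!/usr/bin/env bash
# import_images (hypothetical helper): import every regular file in a backup
# directory into a pool as an RBD image named after the file.
# Assumption: file name == desired image name.
import_images() {
  local pool=${1:-testpool} dir=${2:-/backup/vm}
  local rbd=${RBD:-rbd}            # set RBD=echo to preview the commands
  local path image
  for path in "$dir"/*; do
    [ -f "$path" ] || continue     # skip subdirectories and an unmatched glob
    image=$(basename "$path")
    "$rbd" import --dest-pool "$pool" --dest "$image" "$path"
  done
}
```

For example, RBD=echo import_images testpool /backup/vm prints the rbd commands without executing them; drop the RBD=echo prefix to perform the import.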

Before running the tests, flush and drop the file system caches so that previously cached data does not skew the results, then run the write, sequential read (seq), and random read (rand) tests with rados bench:

sync && echo 3 > /proc/sys/vm/drop_caches

rados bench -p testpool 10 write --no-cleanup

rados bench -p testpool 10 seq --no-cleanup

rados bench -p testpool 10 rand --no-cleanup

The --no-cleanup flag tells rados bench not to delete the objects written by the write test, so the seq and rand tests can read the same objects back. After completing the tests, remove the benchmark objects with the following command:

rados -p testpool cleanup
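The cache drop and the three benchmark runs can be wrapped in one function so every run uses the same sequence. This is a sketch, assuming it runs as root on a node with the Ceph client tools installed. drop_caches and bench_pool are hypothetical helper names, and the RADOS variable is an illustrative addition for previewing the commands with RADOS=echo.

```shell
#!/usr/bin/env bash
# drop_caches (hypothetical helper): write dirty pages to disk first, then drop
# the page cache, dentries and inodes. Requires root.
drop_caches() {
  sync
  echo 3 > /proc/sys/vm/drop_caches
}

# bench_pool (hypothetical helper): the write/seq/rand rados bench sequence
# against one pool, followed by removal of the benchmark objects.
bench_pool() {
  local pool=${1:-testpool} secs=${2:-10}
  local rados=${RADOS:-rados}      # set RADOS=echo to preview the commands
  drop_caches
  "$rados" bench -p "$pool" "$secs" write --no-cleanup
  "$rados" bench -p "$pool" "$secs" seq --no-cleanup
  "$rados" bench -p "$pool" "$secs" rand --no-cleanup
  "$rados" -p "$pool" cleanup
}
```

Run bench_pool testpool 10 as root. Note that a RADOS=echo preview still calls drop_caches, so it also needs root.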

Adjust the benchmark parameters so they accurately reflect your workload. I copy the resulting values into Excel to compare them with future benchmarks. Monitoring tools such as Prometheus and Grafana are highly recommended for monitoring Ceph, the operating system, and the hardware, so you can find the bottlenecks in your setup.

In my case, I focus on the following results because they are the most relevant to me:

Bandwidth (MB/sec): 1721.74

Average IOPS: 407

Max latency(s): 0.262508

Min latency(s): 0.0144777

With dual 10Gbps links, the bandwidth meets expectations, but the wide spread between minimum and maximum latency shows room for improvement. This is most likely caused by my current network infrastructure, which isn’t optimized for shared storage. No amount of tuning will let you exceed the limits of the underlying hardware; hardware and network changes generally yield bigger improvements.
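When comparing many runs, copying these four values by hand gets tedious. Below is a minimal sketch that turns one rados bench summary into a CSV line. It assumes the summary labels shown above (Bandwidth (MB/sec), Average IOPS, Max latency(s), Min latency(s)). bench_to_csv and the column order are illustrative choices.

```shell
#!/usr/bin/env bash
# bench_to_csv (hypothetical helper): read one rados bench summary on stdin and
# print: label,bandwidth_mb_s,avg_iops,max_latency_s,min_latency_s
bench_to_csv() {
  awk -v label="${1:-run}" -F':' '
    /^Bandwidth \(MB\/sec\)/ { bw = $2 }     # "Max bandwidth" does not match
    /^Average IOPS/          { iops = $2 }
    /^Max latency\(s\)/      { maxl = $2 }
    /^Min latency\(s\)/      { minl = $2 }
    END {
      gsub(/[ \t]/, "", bw);   gsub(/[ \t]/, "", iops)
      gsub(/[ \t]/, "", maxl); gsub(/[ \t]/, "", minl)
      printf "%s,%s,%s,%s,%s\n", label, bw, iops, maxl, minl
    }'
}
```

For example, rados bench -p testpool 10 rand --no-cleanup | bench_to_csv rand-before >> results.csv builds up a file that opens directly in Excel.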

CRUSH map tuning

The CRUSH map determines where your data is placed within the cluster topology. This is how Ceph keeps data safe across all the failure domains you define (e.g., server, rack, PDU). As a consequence, your cluster’s speed is ultimately limited by the slowest component of this topology. On larger clusters, tuning the CRUSH map can help distribute the load more evenly.

If your Ceph cluster was upgraded from an older version, also check the CRUSH tunables:

ceph osd crush show-tunables
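The output is JSON. To check it from a script, the sketch below extracts the profile and optimal_tunables keys (explained next) with sed, so no JSON tool is needed. It assumes those key names appear as in typical show-tunables output; confirm them against your release. check_tunables is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# check_tunables (hypothetical helper): read `ceph osd crush show-tunables`
# JSON on stdin, print the profile and optimal_tunables values, and return
# non-zero unless optimal_tunables is 1. Key names are assumed, not verified.
check_tunables() {
  local json profile optimal
  json=$(cat)
  profile=$(printf '%s\n' "$json" | sed -n 's/.*"profile": *"\([^"]*\)".*/\1/p')
  optimal=$(printf '%s\n' "$json" | sed -n 's/.*"optimal_tunables": *\([0-9]*\).*/\1/p')
  echo "profile=${profile:-unknown} optimal_tunables=${optimal:-unknown}"
  [ "$optimal" = "1" ]
}
```

Run it as ceph osd crush show-tunables | check_tunables; a non-zero exit status means the tunables are not optimal.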

Look for the profile key, which should currently be “jewel”, and the optimal_tunables key, which should be 1. If you upgraded from a version older than Jewel (v10), the output might still show tunables for an earlier release. Before changing this setting, ensure that ALL your servers AND clients run at least the release named by the profile (Jewel), because the setting effectively defines the minimum version your clients need:

ceph osd crush tunables optimal

This change can trigger considerable data movement in your cluster as Ceph recalculates and readjusts data placement. Monitor the process with ceph status, and run the benchmark again after all activity has finished.
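To start the new benchmark only after the data movement has finished, a small loop can poll until every placement group is active+clean. This sketch assumes the plain-text summary of ceph pg stat looks like “1024 pgs: 1024 active+clean; …” (optionally preceded by a version prefix such as “v42: ”). Check this against your release before relying on it. all_pgs_clean and wait_for_clean are hypothetical helper names, and the CEPH variable exists only so the loop can be tried with a stand-in command.

```shell
#!/usr/bin/env bash
# all_pgs_clean (hypothetical helper): read one `ceph pg stat` summary line on
# stdin and succeed only if every PG is in a state starting with active+clean
# (active+clean, active+clean+scrubbing, ...). Assumed input format:
#   [vNNN: ]1024 pgs: 1000 active+clean, 24 active+remapped+backfilling; ...
all_pgs_clean() {
  awk '
    NR == 1 {
      line = $0
      sub(/^v[0-9]+: /, "", line)          # optional map-version prefix
      split(line, head, " pgs: ")
      total = head[1] + 0
      states = head[2]
      sub(/;.*/, "", states)               # drop the data/usage part
      n = split(states, parts, ", ")
      clean = 0
      for (i = 1; i <= n; i++) {
        split(parts[i], kv, " ")
        if (kv[2] ~ /^active\+clean/) clean += kv[1]
      }
      ok = (total > 0 && clean == total)
    }
    END { exit !ok }'                      # empty input counts as not clean
}

# wait_for_clean (hypothetical helper): poll every <interval> seconds, at most
# <max_tries> times; returns 0 once all PGs are active+clean, 1 on timeout.
wait_for_clean() {
  local interval=${1:-60} max_tries=${2:-120} ceph=${CEPH:-ceph} i=0
  while [ "$i" -lt "$max_tries" ]; do
    if "$ceph" pg stat | all_pgs_clean; then
      return 0
    fi
    i=$((i + 1))
    sleep "$interval"
  done
  return 1
}
```

For example, wait_for_clean 60 120 && bench_pool testpool 10 would wait up to two hours before benchmarking (bench_pool being any benchmark wrapper you use).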

Output of ceph status for a healthy pool

Placement Groups (PGs)

Placement groups control how data is distributed across the physical disks. As your pool grows, the number of placement groups may need to be adjusted. If it isn’t enabled already, you can turn on autoscaling:

ceph osd pool set testpool pg_autoscale_mode on

However, the autoscaler is conservative: it only changes the number of PGs when its recommended value differs from the current value by more than a factor of three.
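The autoscaler’s decision rule can be expressed as a simple check: pg_num is left unchanged unless the target differs from the current value by more than a threshold factor, which defaults to 3. The sketch below is a simplified model for illustration only. would_autoscale is a hypothetical name, and the real autoscaler also applies limits such as pg_num_min and pg_num_max that are ignored here.

```shell
#!/usr/bin/env bash
# would_autoscale (hypothetical helper, simplified model): succeed if the
# target PG count differs from the current one by more than the threshold.
would_autoscale() {
  local current=$1 target=$2 threshold=${3:-3}
  awk -v c="$current" -v t="$target" -v th="$threshold" 'BEGIN {
    exit !(t > c * th || t < c / th)
  }'
}
```

For example, would_autoscale 512 1024 fails (a factor of 2 is within the threshold), so a pool at half its ideal PG count is left alone, while would_autoscale 128 1024 succeeds.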

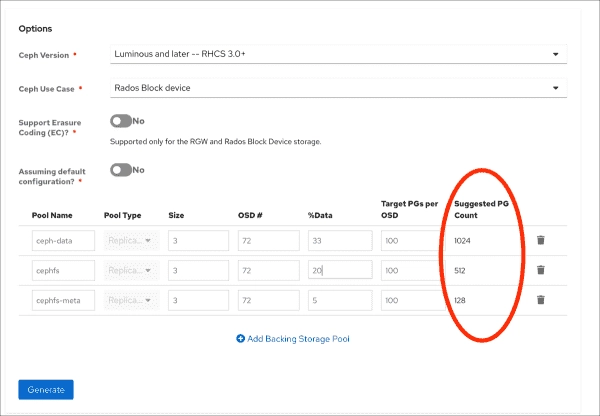

I took the output of this command:

ceph osd pool autoscale-status

and entered it into one of the PG calculators available online, which gave this recommendation:

Ceph Placement Group calculator output
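Calculators differ in their details, but many build on the classic rule of thumb from older Ceph documentation: total PGs = (number of OSDs × about 100) / replica count, rounded up to the next power of two. Below is a sketch of that rule. pg_estimate is a hypothetical name, and the sketch does not reproduce the per-pool percentage-of-data weighting that online calculators apply.

```shell
#!/usr/bin/env bash
# pg_estimate (hypothetical helper): classic rule of thumb,
# ceil(OSDs * target PGs per OSD / replica count), rounded up to a power of two.
pg_estimate() {
  local osds=$1 size=$2 per_osd=${3:-100}
  local raw=$(( (osds * per_osd + size - 1) / size ))   # integer ceiling
  local pg=1
  while [ "$pg" -lt "$raw" ]; do
    pg=$(( pg * 2 ))
  done
  echo "$pg"
}
```

For a hypothetical cluster of 30 OSDs with 3 replicas, pg_estimate 30 3 prints 1024 (30 × 100 / 3 = 1000, rounded up to 1024). These inputs are illustrative, not the configuration of the cluster benchmarked here.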

For my setup, the recommendation is 1024 at current usage (33%), growing to 2048 when nearing 100% usage. Since I don’t expect lots of growth, I will use 1024. Use this command to adjust the value:

ceph osd pool set testpool pg_num 1024

ceph status

This rebalanced the pool, which took about 60 minutes. I then rechecked my benchmarks:

Bandwidth (MB/sec): 2179.37

Average IOPS: 544

Max latency(s): 0.157076

Min latency(s): 0.0164279

This is a significant improvement, although I did see higher CPU, bandwidth, and memory usage. Optimization often means trading performance against resource use. PG settings also affect rebuild times after disk or node failures.
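To put numbers on the change between the two runs, compute the relative difference for each metric. Below is a minimal awk-based sketch. pct_change is a hypothetical helper name, and the inputs are the before/after values reported in this article.

```shell
#!/usr/bin/env bash
# pct_change (hypothetical helper): relative change from old to new, in percent,
# printed with one decimal place.
pct_change() {
  awk -v old="$1" -v new="$2" 'BEGIN { printf "%.1f\n", (new - old) / old * 100 }'
}

# Before/after values from the two benchmark runs in this article.
echo "Bandwidth:    $(pct_change 1721.74 2179.37)%"
echo "Average IOPS: $(pct_change 407 544)%"
echo "Max latency:  $(pct_change 0.262508 0.157076)%"
echo "Min latency:  $(pct_change 0.0144777 0.0164279)%"
```

With these inputs, the script prints 26.6% for bandwidth, 33.7% for average IOPS, -40.2% for maximum latency, and 13.5% for minimum latency. Throughput and worst-case latency improved, while the best-case latency rose slightly.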

Network setup and traffic schedulers

Because Ceph is a network-based storage system, your network, and particularly its latency, has the greatest effect on performance. If your network supports it, configure a larger MTU (jumbo frames) and use a dedicated network for Ceph traffic.
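A common way to confirm that jumbo frames work end to end is to send a ping that does not fit into a standard frame while prohibiting fragmentation. The ICMP payload is the MTU minus 28 bytes (a 20-byte IPv4 header plus an 8-byte ICMP header). This sketch assumes Linux iputils ping. icmp_payload and check_mtu_path are hypothetical helper names, and the peer address in the example is a placeholder.

```shell
#!/usr/bin/env bash
# icmp_payload (hypothetical helper): largest ICMP payload that fits in one
# IPv4 packet of the given MTU: MTU - 20 (IPv4 header) - 8 (ICMP header).
icmp_payload() {
  echo $(( ${1:-9000} - 28 ))
}

# check_mtu_path (hypothetical helper): ping a peer with fragmentation
# prohibited (-M do, Linux iputils). It only succeeds if every hop on the
# path accepts packets of the given MTU.
check_mtu_path() {
  local host=$1 mtu=${2:-9000}
  ping -c 3 -M do -s "$(icmp_payload "$mtu")" "$host"
}
```

For example, check_mtu_path 192.0.2.10 9000 sends 8972-byte payloads to the placeholder address 192.0.2.10; replace it with another Ceph node on the storage network.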

Enlarging buffers is often advised as a way to boost bandwidth, but this frequently increases latency and, in turn, reduces the number of disk operations that can be performed per second (IOPS).

Both servers and network switches have evolved drastically and deliver impressive speed on local area networks. Current mainstream Linux distributions ship with default buffer and network settings that work efficiently on 10 to 100Gbps networks.

Many TCP tuning guides, some written specifically for Ceph, recommend settings that were mainly intended to improve performance on obsolete Linux kernels. Modern Linux kernels (version 6 and later) have absorbed and improved on many of these suggestions, so following such guides can be counterproductive.

After extensive testing, I concluded that changing TCP tunables, including the commonly recommended changes to the default network scheduler, produces no significant performance gains. In some cases, these tunables did not change the averages but widened the gap between high and low latency, making the system’s performance less predictable.

For applications like Ceph, where demand, latency between nodes, and available bandwidth fluctuate very little, spending CPU cycles on optimizing network packets is usually futile.

Kernel and hardware tuning

Be cautious with the many kernel and system tuning guides available online: they are often outdated or relevant only to older versions of Ceph or the kernel. I therefore suggest not following them.

However, the disk queue scheduler is an exception to this rule. You can check the scheduler for your disks in this way:

cat /sys/block/sda/queue/scheduler

Here, sda is one of the Ceph storage disks. On my system, this displays:

none [mq-deadline]

The scheduler in brackets is the active one. Here it is mq-deadline, the multi-queue version of the deadline scheduler, which gives priority to read requests. This is the best choice for most SAS/SATA devices. For enterprise NVMe storage, however, it is advisable to set the scheduler to none, because these drives have power-loss-protected caches and controllers that perform their own optimization. Run this command for each NVMe disk in your system, or loop over them in a script; here, nvme0n1 is the NVMe drive:

echo "none" > /sys/block/nvme0n1/queue/scheduler
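A sketch of such a loop: it prints the active (bracketed) scheduler of a disk and sets none on every NVMe device found under /sys/block. active_scheduler and set_nvme_none are hypothetical helper names, and SYSFS_ROOT is an illustrative override for trying the functions against a copy of the directory tree. Values written to sysfs this way do not survive a reboot, so you still need to reapply them at boot (for example, with a udev rule).

```shell
#!/usr/bin/env bash
# Root of sysfs; overridable (illustrative addition) for testing against a copy.
SYSFS_ROOT=${SYSFS_ROOT:-/sys}

# active_scheduler (hypothetical helper): print the bracketed, i.e. active,
# scheduler of one block device, e.g. "mq-deadline" for "none [mq-deadline]".
active_scheduler() {
  sed -n 's/.*\[\(.*\)\].*/\1/p' "$SYSFS_ROOT/block/$1/queue/scheduler"
}

# set_nvme_none (hypothetical helper): set the scheduler to "none" on every
# NVMe device (nvme0n1, nvme1n1, ...) and report the result. Requires root.
set_nvme_none() {
  local f dev
  for f in "$SYSFS_ROOT"/block/nvme*n*/queue/scheduler; do
    [ -e "$f" ] || continue              # glob matched nothing
    dev=${f#"$SYSFS_ROOT"/block/}        # nvme0n1/queue/scheduler
    dev=${dev%%/*}                       # nvme0n1
    echo none > "$f"
    echo "$dev: $(active_scheduler "$dev")"
  done
}
```

For example, active_scheduler sda shows the current setting of a SAS/SATA disk, and set_nvme_none applies none to all NVMe drives in one pass.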

One other setting that we noticed makes a difference is power saving in the BIOS/UEFI. Many manufacturers ship default profiles that optimize for power savings. These conserve power by putting the hardware into low-power states, but waking the system up again takes time, which adds latency. I recommend disabling power management where possible, or following the manufacturer’s recommended settings for software-defined storage (if available).

Power management profile on a Dell server