Chat Completions API

The OpenAI API provides various services and functionalities accessible through different endpoints. These services include, but are not limited to, image generation (DALL-E API), text-to-speech conversion (Audio API), and text generation (Chat Completions API).

Today, I will discuss the Chat Completions endpoint that handles interactive and conversational text generation tasks, such as those performed by ChatGPT. This service focuses on generating responses in a chat-like format, where the input is typically a message or a series of messages, and the output is a conversational reply that considers the context provided in the input.

Understanding roles

Grasping the concept of roles is essential when working with the Chat Completions API. The API includes three roles, namely System, User, and Assistant. These roles are specifically created to distinguish the instructional context for the model, inputs from the actual user, and the response from the model.

System Role: This role is used to give context or instructions to the model. Such messages set the parameters or rules for how the AI should behave or reply in the conversation. They are sent to the model along with the rest of the messages, but they are not part of the visible dialogue between the user and the assistant. For example, one can establish the tone of the AI’s responses (professional, casual) or the level of detail (detailed, concise).

User Role: Messages from the actual human user engaging with the model fall under this category. These inputs can be queries, statements, or replies that the user enters into the conversation.

Assistant Role: This role is attributed to messages generated by the model in response to user inputs. When the AI produces a response, it is labeled with the Assistant Role, marking it as the AI’s contribution to the conversation.

Roles and their content are stored in Python dictionaries. Each dictionary contains two keys: “role” and “content.” The entire conversation is made up of a Python list of dictionaries.

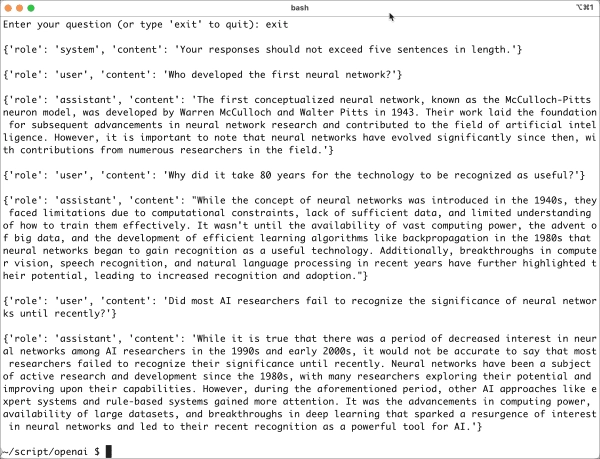
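As a minimal sketch of that structure, the snippet below builds a short conversation by hand. The message texts are made up for illustration; only the "role" and "content" keys and the three role names come from the API.

```python
# A conversation is a list of dictionaries, one per message.
# The message texts are illustrative placeholders.
messages = [
    {"role": "system", "content": "You are a concise assistant."},
    {"role": "user", "content": "What is an API endpoint?"},
    {"role": "assistant", "content": "An endpoint is a URL that exposes one service of an API."},
]

# Each dictionary carries exactly two keys: "role" and "content".
for message in messages:
    print(f'{message["role"]:>9}: {message["content"]}')
```

The order of the list is the order of the conversation, so new messages are always appended at the end.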

A simple chatbot example

I assume here that you have read my previous post and installed the OpenAI library for Python on your computer. The Python script below demonstrates how to use the roles.

    from openai import OpenAI

    # Initialize the OpenAI client
    client = OpenAI()

    # Define the System Role
    messages = [
        {"role": "system", "content": "Your responses should not exceed five sentences in length."}
    ]

    while True:
        # Prompt the user for a question (blank lines keep the output readable)
        print()
        user_prompt = input("Enter your question (or type 'exit' to quit): ")
        print()

        # Check if the user wants to exit
        if user_prompt.lower() == 'exit':
            # Print the whole conversation before leaving the loop
            for message in messages:
                print(message)
                print()
            break

        # Add the user's question to the messages as a User Role
        messages.append({"role": "user", "content": user_prompt})

        # Generate a completion using the entire conversation so far
        completion = client.chat.completions.create(
            model="gpt-3.5-turbo",
            messages=messages
        )

        # Get the response and print it
        model_response = completion.choices[0].message.content
        print(model_response)

        # Add the response to the messages as an Assistant Role
        messages.append({"role": "assistant", "content": model_response})

Save the script to a file named SimpleChatBot.py.

To execute the script on Windows, type this command:

    py SimpleChatBot.py

On macOS, you can use this command:

    python3 SimpleChatBot.py

Here’s a breakdown of the script and the roles:

OpenAI Client Set-Up: The script begins by importing the OpenAI class from the library and creating a client object. This object handles the connection to the OpenAI API.

System Role: A list named messages is created with one dictionary. This dictionary has two keys: “role” and “content.” The value of the “role” key is “system.” The “content” key is given the value “Your responses should not exceed five sentences in length.” This sets a standard for the AI’s responses.

Conversation Loop: The script starts a while loop that repeatedly prompts the user for input.

User Role: When the user enters a question, it is appended to the messages list with the User Role. Including the user’s input in the list is essential, because the model only sees what the list contains.

Completion method: The client.chat.completions.create method is part of the OpenAI API client. It is used to create a chat completion, a response generated by the model based on a series of messages. The model parameter specifies the AI model to use for generating the completion. In this case, the model is gpt-3.5-turbo, which is one of the various models provided by OpenAI. The messages parameter contains the list of messages of the entire conversation.

Response: The completion variable holds the result of the call to the client.chat.completions.create method. This result includes a list of choices, each representing a possible completion generated by the AI model. In this case, the code is only interested in the first choice, so it uses completion.choices[0] to access it. Each choice has a message property, which is an object that carries the role and content of the message as attributes (in the current Python library it is not a plain dictionary). The content attribute holds the actual text of the message, so the code uses completion.choices[0].message.content to extract the AI-generated reply.
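To make the attribute chain concrete, here is a small sketch that reads the reply from a response-shaped object. It assumes version 1.x of the OpenAI Python library, where the message fields are read as attributes. The fake_completion object is a hand-built stand-in, not a real API response, so the snippet runs without a network call, and first_reply is a hypothetical helper name.

```python
from types import SimpleNamespace


def first_reply(completion):
    """Return the text of the first choice in a chat completion."""
    return completion.choices[0].message.content


# Hand-built stand-in that mimics only the fields used in the script.
fake_completion = SimpleNamespace(
    choices=[
        SimpleNamespace(
            message=SimpleNamespace(role="assistant", content="Hello! How can I help?")
        )
    ]
)

print(fake_completion.choices[0].message.role)
print(first_reply(fake_completion))
```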

Assistant Role: After the AI generates a response, this response is appended to the messages list with the Assistant Role. This role is attributed to messages generated by the model, distinguishing them from the user’s and system’s messages.

Continuation: The loop continues, allowing for a back-and-forth conversation where each new user input and AI response is added to the messages list, preserving the flow and context of the conversation.

Exit: If the user types “exit” (in any letter case), the for loop prints each dictionary in the messages list, and the break statement then exits the while loop.
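The steps above can be condensed into one function per conversational turn, which also makes the logic easy to try without calling the API. This is a sketch, not the structure of the script itself: chat_turn and StubClient are hypothetical names, and the stub only imitates the client.chat.completions.create call path with a canned reply.

```python
from types import SimpleNamespace


def chat_turn(client, messages, user_prompt, model="gpt-3.5-turbo"):
    """Run one turn: record the question, call the model, record the answer."""
    messages.append({"role": "user", "content": user_prompt})
    completion = client.chat.completions.create(model=model, messages=messages)
    reply = completion.choices[0].message.content
    messages.append({"role": "assistant", "content": reply})
    return reply


class StubClient:
    """Hypothetical stand-in for the OpenAI client; returns a canned reply."""

    def __init__(self, canned_reply):
        self._canned_reply = canned_reply
        # Mirror the client.chat.completions.create attribute path.
        self.chat = SimpleNamespace(
            completions=SimpleNamespace(create=self._create)
        )

    def _create(self, model, messages):
        message = SimpleNamespace(role="assistant", content=self._canned_reply)
        return SimpleNamespace(choices=[SimpleNamespace(message=message)])


messages = [
    {"role": "system", "content": "Your responses should not exceed five sentences in length."}
]
reply = chat_turn(StubClient("Paris."), messages, "What is the capital of France?")
print(reply)
print([m["role"] for m in messages])
```

With the real library, passing the OpenAI() client in place of the stub should work the same way, since the function relies only on the call already used in the script.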